Chief learning officers are often asked to demonstrate the value of training, but most aren’t satisfied with the tools, resources or data available to them. As a result, they can’t properly establish training impact.

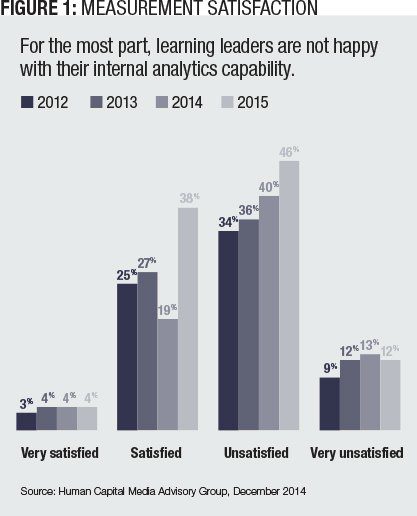

Organizations increasingly leverage analytics as a decision-making tool, but only 40 percent report their measurement programs are “fully aligned” with their learning strategy. This reflects a broader pattern: Measurement in learning and development is falling behind measurement in other areas of the business. CLOs are more dissatisfied with their organizational approach to measurement this year than last, continuing a trend of the past three years.

Every other month, IDC surveys Chief Learning Officer magazine’s Business Intelligence Board to gauge the issues, opportunities and attitudes that make up the role of a senior learning executive. For this article, more than 330 CLOs shared their thoughts on learning measurement.

Overall, measurement collection and reporting can be rather basic. Some 3 out of 4 enterprises use general training output data (courses, students and hours of training) to help justify training impact. About 3 out of 5 measure stakeholder and learner satisfaction with training programs. Only about half report that training output is aligned with specific learning initiatives.

More advanced measurement applications, such as employee engagement, employee performance or business impact, are still constrained by time, resources and the lack of a solid measurement structure. A third or fewer enterprises measure them.

It’s All About the Data

Learning professionals generally agree on the value of measurement. When done properly, it can demonstrate training’s impact on the company’s top and bottom lines. Effective measurement of training impact also makes it more likely that organizational initiatives will include appropriate learning components, which helps the learning organization increase its relevance.

Key metrics include employee performance, speed-to-proficiency, customer satisfaction and improved sales numbers. The challenge remains gaining access to data and finding the time and resources to conduct measurement.

In 2008, most enterprises reported a high level of dissatisfaction with the state of training measurement. By 2010, that feeling had moderated substantially as analytics became mainstream in other areas of the business. But once CLOs could compare their capabilities with those in other business areas, their dissatisfaction increased. In 2013, 2014 and again in 2015, dissatisfaction has been higher, partly as a result of higher expectations combined with continuing challenges around resources and leader support (Figure 1).

Some CLOs report:

- “We don’t have the right technology.”

- “There is no accountability model and clear expectations from the top down.”

- “We don’t have a wide enough breadth and depth of measurement areas, nor a consistent way of gathering data for the variety of training methods we use.”

The gap between the learning organization’s role within the enterprise and its measurement capability is still wide. When measurement programs are weak, most CLOs report that their influence, and their role in helping achieve organizational priorities, are also weak. However, when organizations are very satisfied with their measurement approach, they tend to believe learning and development plays an important role in achieving organizational priorities.

Compared with prior years, the common forces working against measurement program satisfaction remain a combination of capability and support. CLOs believe their ability to deploy an effective measurement process is limited by a lack of resources, a lack of management support and an inability to bring together data from different functions.

Resources and leadership support are essential for developing an effective measurement program, yet one respondent said, “There is no accountability model and clear expectations from the top down.” Another agreed, but suggested: “Learning is not important until we have an issue (regulatory, corporate audit).”

Leadership plays a critical role in how much the organization values learning measurement. One CLO said, “We are relatively new at digging into measurement and successes when it comes to training. Our executive leadership has only wanted to see how many people have attended what classes.” Others report that moving a program forward requires more effort: “We need to work harder on specifically communicating business results and ROI.”

Systems need to work, too. One CLO said: “Our systems don’t communicate with one another, making it very difficult to get the information needed for measurement.” In other cases, the data exists, but “we don’t access all the data that exists and need to find a way to integrate it all into a cohesive picture upon which we act.”

With these forces working against advances in measurement, there has been little change in its use for learning and development. Consistent with past results, about 80 percent of companies do some measurement; slightly more of them use a manual process, and fewer use some form of automated system (Figure 2).

The mix of approaches is similar to what it was as far back as 2008. There has been only a slight decrease in the percentage of respondents who indicate they use a manual process, and a significant increase in the percentage of enterprises that predominantly use LMS data.

CLOs can only use the tools they have, but the systems used for data collection seem to correlate with their degree of satisfaction. The data source that causes the most dissatisfaction is learning impact data captured directly from an enterprise resource planning, or ERP, system.

None of the CLOs who report an ERP as their source for learning metrics are satisfied with their organization’s training measurement. On the other hand, combining LMS data with ERP data, or manually collected data with LMS data, results in greater satisfaction (Figure 3). This suggests ERP data is not specific enough to evaluate training impact. But LMS data alone is also insufficient.

Tying Training to Outcomes Improves

There has been a meaningful increase in the percentage of enterprises correlating training to organizational change. Almost half of enterprises measure training’s effect on customer satisfaction. Measurement in this area is improving, but only about half of organizations evaluate training’s impact on employee engagement, and fewer than 40 percent measure its impact on productivity and employee retention. Though training programs aren’t always expected to affect quality or retention, there is a real opportunity for analytics to establish training impact on a wider set of business outcomes (Figure 4).

Effective measurement can affect both the broader enterprise and the training organization’s bottom line. Organizations that consistently tie training to specific changes are more likely to train, but their training is often better targeted to the most appropriate people and topics, so they spend less money and time on training overall.

It’s Not All Bad

Despite the challenges, organizations are making progress. As Figure 1 showed, while 42 percent of CLOs are somewhat satisfied with the measurement efforts at their companies, nearly 60 percent are planning to increase the training impact measures they undertake.

CLOs will be developing measurement programs to track training impact on employee productivity and overall business performance. About half of the enterprises that don’t currently track training impact on employee engagement plan to begin doing so. Measuring the impact of training on customer satisfaction and employee retention is also on many CLOs’ agendas.

CLOs can adopt three significant practices to demonstrate training impact effectively:

• Set expectations with stakeholders. Help stakeholders understand the commitment required to see assessment projects through to the end. This will reduce resistance encountered during the measurement phase. Engaged stakeholders can help secure necessary support from other parts of the organization.

• Define success early. By defining what success will look like upfront, CLOs can more easily identify and benchmark key metrics for measurement before training is delivered, and make post-training results easier to evaluate.

• Establish metrics at the project or business-unit level. While it may be tempting to ‘swing for the fences’ and demonstrate training’s value at the enterprise level, successful measurement programs typically start as smaller initiatives that focus on individual projects or business units. Working with smaller groups typically means fewer obstacles interfere with the measurement process.

Companies that incorporate these simple guidelines into their assessment methodology should see a marked improvement in the success and relevance of their measurement initiatives and training overall.